# 数据库高可用部署

[TOC]

# 1. 虚拟机相关信息

| 主机 | 地址 | 服务 | zookeeper角色 |

|---|---|---|---|

| b1 | 192.168.121.190 | postgresql + zookeeper | follower |

| b2 | 192.168.121.187 | postgresql + zookeeper + pgbouncer + patroni (主库) | leader |

| b3 | 192.168.121.189 | postgresql + zookeeper + pgbouncer + patroni (备库) | follower |

# 2. 版本信息

| 包名 | 版本 |

|---|---|

| CentOS | 7.9 |

| PostgreSQL | 10.5 |

| Pgbouncer | 1.8.1 |

| Zookeeper | 3.4.13 |

| Patroni | 1.4.4 |

注意:本部署方案已经完成了内核配置和数据库安装流程

# 3. 搭建主从数据库

在主库虚拟机上进行如下配置

# 3.1 huge pages配置

- 执行脚本

calc-hugePages.sh计算当前机器设置的页面数,脚本内容如下:

#!/bin/bash

pid=`head -1 $PGDATA/postmaster.pid`

echo "Pid: $pid"

peak=`grep ^VmPeak /proc/$pid/status | awk '{ print $2 }'`

echo "VmPeak: $peak kB"

hps=`grep ^Hugepagesize /proc/meminfo | awk '{ print $2 }'`

echo "Hugepagesize: $hps kB"

hp=$((peak/hps))

echo Set Huge Pages: $hp

- 将执行计算出的页面数设置到sysctl.conf中

echo "vm.nr_hugepages = 147">>/etc/sysctl.conf

- 查看是否设置成功

sysctl -p

- 修改数据库设置,开启对大页面的支持

vim $PGDATA/postgresql.conf

huge_pages = on

- 重启数据库

pg_ctl restart

# 3.2 配置流复制

主库配置

- 配置允许访问的ip

1. 切换目录

cd $PGDATA

2.备份pg_hba.conf文件

cp pg_hba.conf{,.bak}

3. 修改pg_hba.conf,修改pg_hba.conf参数,如主从复制的主库加入用户replicate、从库的ip(192.168.6.22/24)

vim pg_hba.conf

# 添加如下配置

host replication replicate 192.168.121.189/24 trust

4. 重启

pg_ctl restart

- 登录pg修改密码并创建用户

alter user postgres with password 'postgres';

create user replicate with replication password 'replicate';

从库配置

- 使用 pgbasebackup 工具生成备库

pg_basebackup -D /home/postgres/pgdata -R -Fp -Xs -v -P -h 192.168.6.52 -p 5432 -U replicate # pgdata目录修改为自己的机器上的位置

- 启动备数据库,需提前将配置文件中的huge_pages关闭

vim $PGDATA/postgresql.conf

huge_pages = off

- 启动数据库

pg_ctl start

- 运行

calc-hugePages.sh程序,同样和主库一样获取huge_pages的值,然后写入到sysctl.conf

./calc-hugePages.sh

echo "vm.nr_hugepages = 147">>/etc/sysctl.conf

- 查询是否添加成功

sysctl -p

- 重启数据库

pg_ctl restart

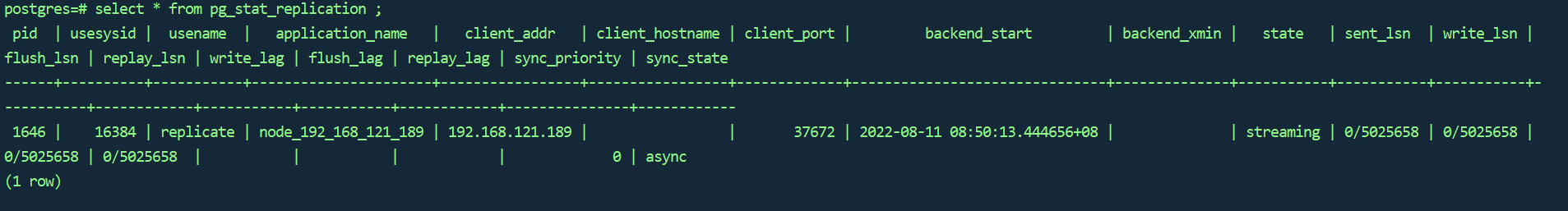

# 3.3 验证主从复制是否成功

主库登录检验

psql

select * from pg_stat_replication ; # 查看复制状态

# 4. 安装pgbouncer

# 4.1 部署情况

| 服务器ip | 部署情况 |

|---|---|

| 192.168.121.187(主库) | pgbouncer |

| 192.168.121.189(备库) | pgbouncer |

# 4.2 相关依赖

| 包名 | 版本 |

|---|---|

| libevent | 2.0.21 |

| pgbouncer | 1.8.1 |

# 4.3 安装libevent

在root用户下安装

1. 解压安装

mkdir /usr/libevent

tar -zvxf libevent-2.0.21-stable.tar.gz

cd libevent-2.0.21-stable

./configure --prefix=/usr/libevent

make -j4 && make install -j4

# 4.4 安装Pgbouncer

在postgres用户下安装

- 解压安装(在/home/postgres目录下)

tar -zvxf pgbouncer-1.8.1.tar.gz

cd pgbouncer-1.8.1

./configure --prefix=/home/postgres/pgbouncer --with-libevent=/usr/libevent/

make -j4 && make install -j4

- 手动创建log目录

mkdir /home/postgres/pgbouncer/log

3. 修改配置文件

vi /home/postgres/pgbouncer/share/doc/pgbouncer/pgbouncer.ini

[databases]

postgres = host=172.16.245.4 port=7432 user=postgres password=postgresql pool_size=20

postgres = host=172.16.245.5 port=7432 user=postgres password=postgresql pool_size=20

[pgbouncer]

listen_port = 6432

listen_addr = *

;unix_socket_dir = ''

;user = postgres

auth_type = md5

auth_file = /home/postgres/pgbouncer/share/doc/pgbouncer/userlist.txt

logfile = /home/postgres/pgbouncer/log/pgbouncer.log

pidfile = /home/postgres/pgbouncer/log/pgbouncer.pid

admin_users = postgres

stats_users = mon

pool_mode = session

client_idle_timeout=10

server_idle_timeout=1800

idle_transaction_timeout=10

client_login_timeout=10

server_reset_query = DEALLOCATE ALL;

server_check_query = select 1

server_check_delay = 10

reserve_pool_size = 5

reserve_pool_timeout = 5

max_client_conn = 50

default_pool_size = 20

log_connections = 0

log_disconnections = 0

log_pooler_errors = 1

ignore_startup_parameters = extra_float_digits

vi userlist.txt

"postgres" "postgresql"

- 启动服务

/home/postgres/pgbouncer/bin/pgbouncer -d pgbouncer/share/doc/pgbouncer/pgbouncer.ini

启动报错:pgbouncer: error while loading shared libraries: libevent-2.0.so.5: cannot open shared object file: No such file or directory解决方案如下:

# 添加至全局变量文件中

su -

vi /etc/profile

export LD_LIBRARY_PATH=/usr/libevent/lib:$LD_LIBRARY_PATH

source /etc/profile

# 或者添加至用户变量 .bash_profile

export PKG_CONFIG_PATH=/home/postgres/libevent/lib/pkgconfig

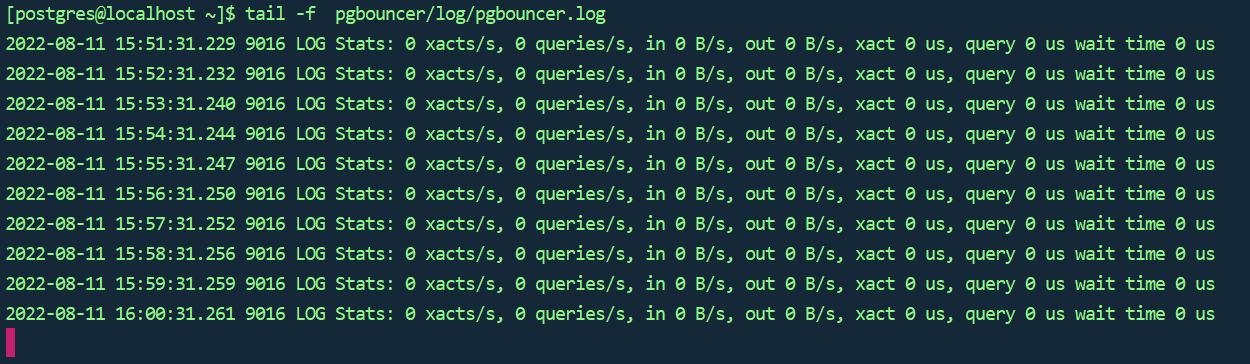

查看日志信息和服务器启动情况

日志信息如下:

tail -f pgbouncer/log/pgbouncer.log

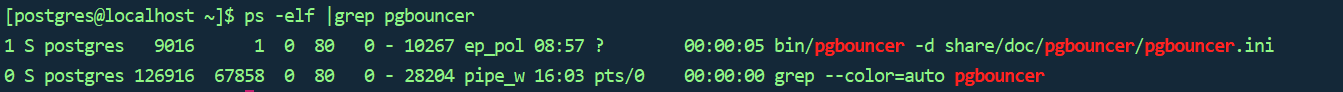

查看服务状态:ps -elf |grep pgbouncer

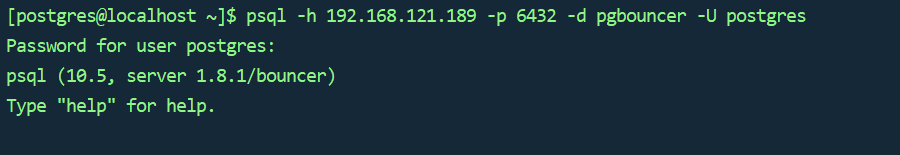

- 确认是否可以登录

psql -h 192.168.121.189 -p 6432 -d pgbouncer -U postgres

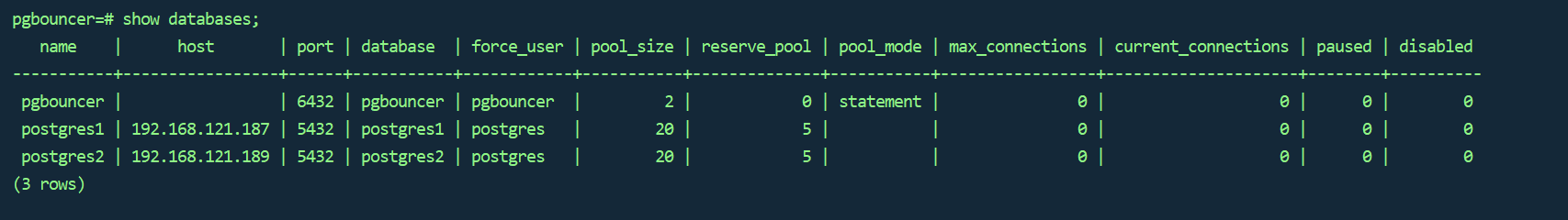

- 查看池中的数据库

show databases;

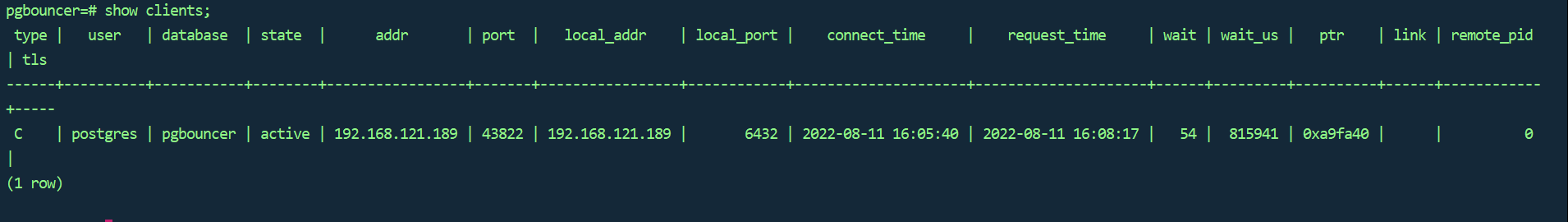

- 查看连接数

show clients;

# 5. 安装Zookeeper

# 5.1 部署情况

| 服务器ip | 模式 | myid |

|---|---|---|

| 192.168.121.187 | master | 3 |

| 192.168.121.189 | follower | 2 |

| 192.168.121.190 | follower | 1 |

# 5.2 下载路径及所装版本

| Zookeeper下载路径 | https://archive.apache.org/dist/zookeeper/zookeeper-3.4.13/zookeeper-3.4.13.tar.gz |

|---|---|

| Zookeeper 版本 | zookeeper-3.4.13.tar.gz |

| Java下载路径 | https://www.oracle.com/java/technologies/downloads/#java8 |

|---|---|

| Java 版本 | jdk-8u341-linux-x64.tar.gz |

# 5.3 安装Java环境

# 创建目录

mkdir /usr/java

# 解压

tar -zvxf jdk-8u341-linux-x64.tar.gz -C /usr/java/

# 添加环境变量

vi /etc/profile

#set java environment

JAVA_HOME=/usr/java/jdk1.8.0_341

JRE_HOME=/usr/java/jdk1.8.0_341/jre

CLASS_PATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

export JAVA_HOME JRE_HOME CLASS_PATH PATH

# 重新加载环境变量

source /etc/profile

# 测试

java -version

# 5.4 解压安装

# 安装目录 /home/postgres/zookeeper-3.4.13

tar -zxvf zookeeper-3.4.13.tar.gz

# 拷贝zoo.cfg

cd /home/postgres/zookeeper-3.4.13/conf

cp zoo.sample.cfg zoo.cfg

# 修改配置文件

vim zoo.cfg

tickTime=2000

server.1 = 192.168.121.189:2888:3888

server.2 = 192.168.121.190:2888:3888

server.3 = 192.168.121.187:2888:3888

initLimit=10

syncLimit=5

dataDir=/home/postgres/zookeeper-3.4.13/data

clientPort=2181

# 创建 data log 目录

mkdir /home/postgres/zookeeper-3.4.13/data

mkdir /home/postgres/zookeeper-3.4.13/log

# 创建 myid 文件 值越高选取为leader的优先级也越高

# 在192.168.121.187(主库)中

echo 3 > /home/postgres/zookeeper-3.4.13/data/myid

# 在192.168.121.189(备库)中

echo 2 > /home/postgres/zookeeper-3.4.13/data/myid

# 在192.168.121.190中

echo 1 > /home/postgres/zookeeper-3.4.13/data/myid

# 为防火墙添加端口

firewall-cmd --permanent --add-port=2888/tcp

firewall-cmd --permanent --add-port=3888/tcp

firewall-cmd --permanent --add-port=2181/tcp

firewall-cmd --reload

# 启动服务 (需要三台服务器启动服务)

/home/postgres/zookeeper-3.4.13/bin/zkServer.sh start

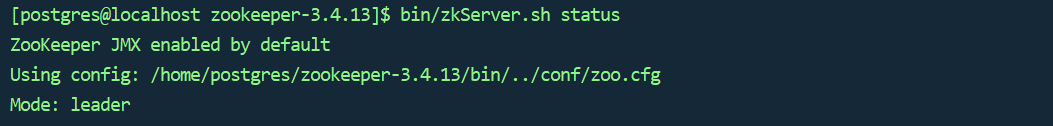

# 查看服务状态

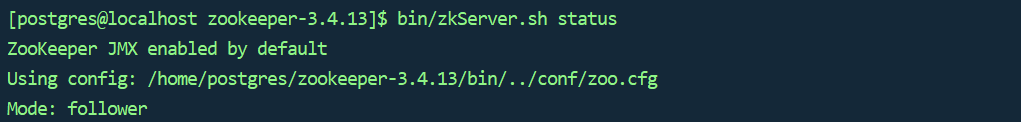

/home/postgres/zookeeper-3.4.13/bin/zkServer.sh status

# 停止服务

/home/postgres/zookeeper-3.4.13/bin/zkServer.sh stop

# 重启服务

/home/postgres/zookeeper-3.4.13/bin/zkServer.sh restart

* 日志请在运行启动命令的目录下查看zookeeper.out文件

安装完成后服务状态如下:

- 主库

- 从库

# 6. 安装Patroni

# 6.1 部署情况

| 服务器ip | 服务 |

|---|---|

| 192.168.121.187 | patroni |

| 192.168.121.189 | patroni |

# 6.2 相关依赖

| patroni下载地址 | https://codeload.github.com/zalando/patroni/zip/1de7c78c04dab9882f6113815d377e6f3490d5dd |

|---|---|

| patroni版本 | patroni-1.4.4.zip |

# 6.3 部署前准备

- 安装python相关包

yum install -y gcc epel-release

yum install -y python-pip python-psycopg2 python-devel

yum install -y python3-pip

yum install -y python36-devel

pip3 install -upgrade pip

- pip安装相关依赖

pip3 install urllib3 boto psycopg2 requests PyYAML six kazoo python-etcd python-consul click prettytable tzlocal python-dateutil psutil cdiff kubernetes psycopg2-binary patroni

在本步骤出现Time out报错的时候,大多数情况是因为网络原因导致的

# 6.4 解压安装

使用postgres用户进行解压安装

- wget下载的包名需要进行修改

wget https://codeload.github.com/zalando/patroni/zip/1de7c78c04dab9882f6113815d377e6f3490d5dd

mv 1de7c78c04dab9882f6113815d377e6f3490d5dd patroni-1.4.4.zip

2. 压缩格式为zip,需要安装unzip解压工具

yum install -y unzip

- 解压安装(路径为/home/postgres/)

# 解压

unzip partoni-1.4.4.zip

# 修改 postgres0.yml 配置文件

cd patroni-1.4.4.zip

vi postgres0.yml

scope: batmant1

#namespace: /service/

name: node_192_168_121_187

restapi:

listen: 192.168.121.187:8009

connect_address: 192.168.121.187:8009

# certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem

# keyfile: /etc/ssl/private/ssl-cert-snakeoil.key

# authentication:

# username: username

# password: password

# ctl:

# insecure: false # Allow connections to SSL sites without certs

# certfile: /etc/ssl/certs/ssl-cert-snakeoil.pem

# cacert: /etc/ssl/certs/ssl-cacert-snakeoil.pem

zookeeper:

hosts: '192.168.121.187:2181,192.168.121.189:2181,192.168.121.190:2181'

bootstrap:

# this section will be written into Etcd:/<namespace>/<scope>/config after initializing new cluster

# and all other cluster members will use it as a `global configuration`

dcs:

ttl: 30

loop_wait: 10

retry_timeout: 10

maximum_lag_on_failover: 1048576

# master_start_timeout: 300

# synchronous_mode: false

# standby_cluster:

# host: 192.168.191.143

# port: 5432

# primary_slot_name: node_192_168_191_141_ss

postgresql:

use_pg_rewind: true

# use_slots: true

parameters:

# shared_preload_libraries: 'pg_pathman'

# wal_level: hot_standby

# hot_standby: "on"

wal_keep_segments: 8

max_wal_senders: 10

max_replication_slots: 10

# wal_log_hints: "on"

max_connections: 1000

archive_mode: "off"

# archive_timeout: 1800s

# archive_command: mkdir -p ../wal_archive && test ! -f ../wal_archive/%f && cp %p ../wal_archive/%f

# recovery_conf:

# restore_command: cp ../wal_archive/%f %p

# some desired options for 'initdb'

initdb: # Note: It needs to be a list (some options need values, others are switches)

- encoding: UTF8

- data-checksums

pg_hba: # Add following lines to pg_hba.conf after running 'initdb'

- host replication replicate 127.0.0.1/32 trust

- host all all 0.0.0.0/0 md5

- host replication all 192.168.121.189/32 trust

- host replication all 192.168.121.187/32 trust

# - hostssl all all 0.0.0.0/0 md5

# Additional script to be launched after initial cluster creation (will be passed the connection URL as parameter)

# post_init: /usr/local/bin/setup_cluster.sh

# Some additional users users which needs to be created after initializing new cluster

users:

admin:

password: admin

options:

- createrole

- createdb

postgresql:

listen: 0.0.0.0:5432

connect_address: 192.168.121.187:5432

data_dir: /data/pgsql/10.5/pgdata/

# bin_dir:

# config_dir:

pgpass: /home/postgres/.pgpass

authentication:

replication:

username: replicate

password: 'replicate'

superuser:

username: postgres

password: 'postgresql'

parameters:

unix_socket_directories: '/home/postgres'

#watchdog:

# mode: automatic # Allowed values: off, automatic, required

mode: off

# device: /dev/watchdog

# safety_margin: 5

tags:

nofailover: false

noloadbalance: false

clonefrom: false

nosync: false

# 6.5 启动Patroni服务

- 后台启动

nohup patroni postgres0.yml > postgres0.log 2>&1 &

- 查看服务状态

ps -ef | grep patroni

日志文件保存在postgres0.log中

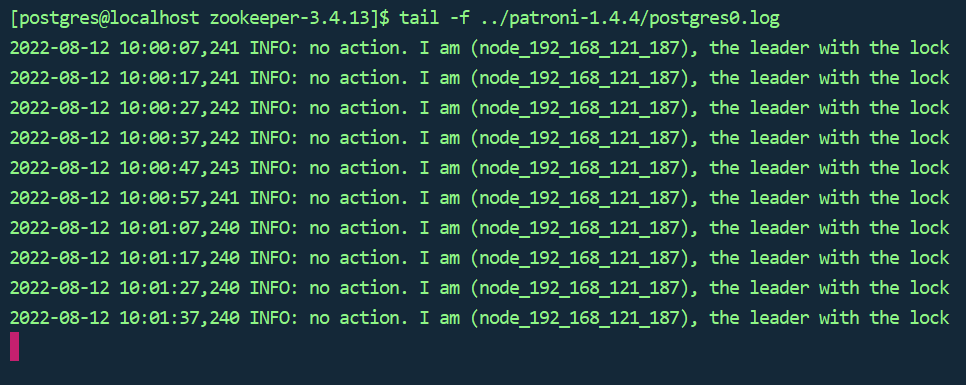

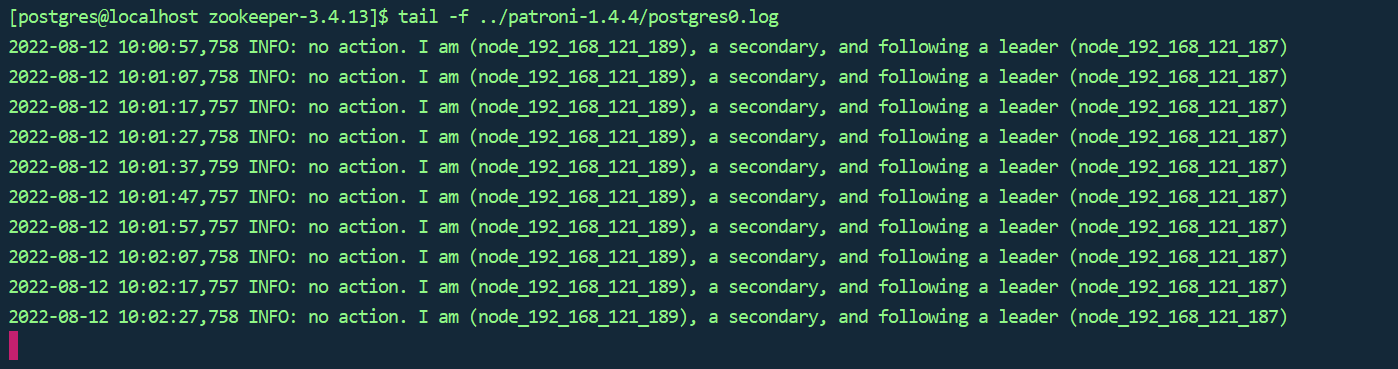

# 6.5.1 追踪日志信息

- 主库

- 备库

# 6.5.2 常见问题

在postgres0.log中可以看到启动及错误信息,

- 如出现refuse connection 首先检查配置文件中的服务端口8009是否在防护墙放行。

- 出现pg相关的报错检查pg数据库是否启动,配置文件中的相关信息是否正确。

- 出现python相关的报错检查相关依赖是否安装和升级。

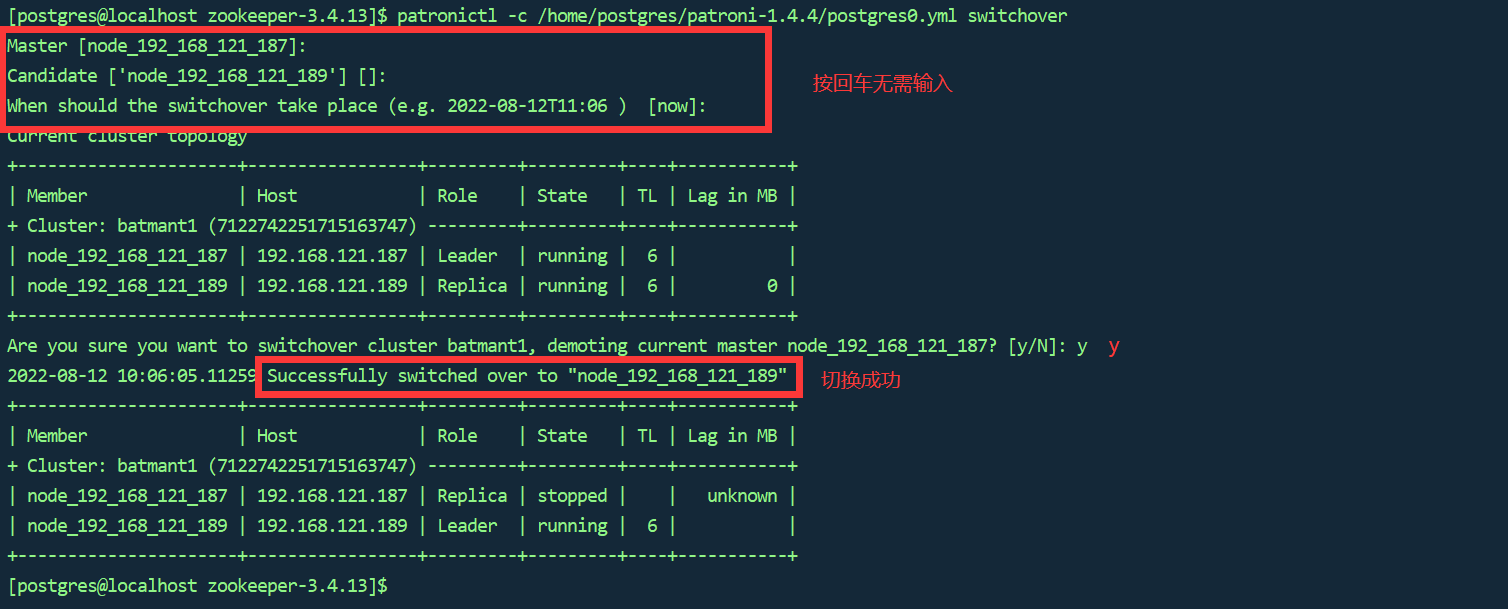

# 6.6 Patroni 主备切换

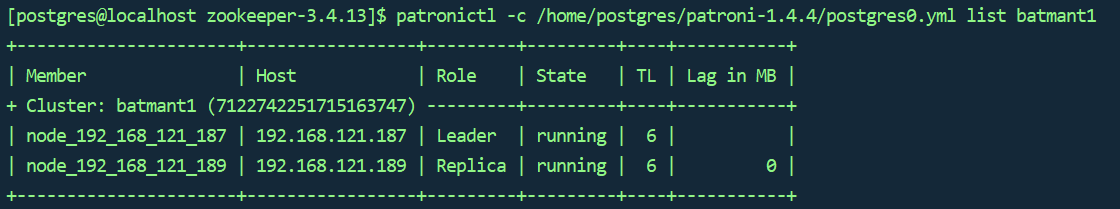

# 6.6.1 查看patroni主备信息

patronictl -c /home/postgres/patroni-1.4.4/postgres0.yml list batmant1

# 6.6.2 手动进行主备切换

patronictl -c /home/postgres/patroni-1.4.4/postgres0.yml switchover

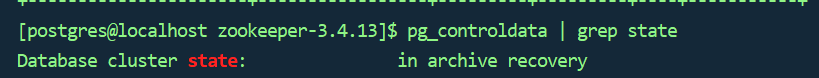

# 6.2.3 查看切换信息

- 查看主库的状态(已经转为备库)

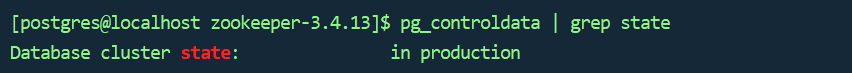

pg_controldata | grep state

- 查看备库的状态(已经转为主库)